Problem

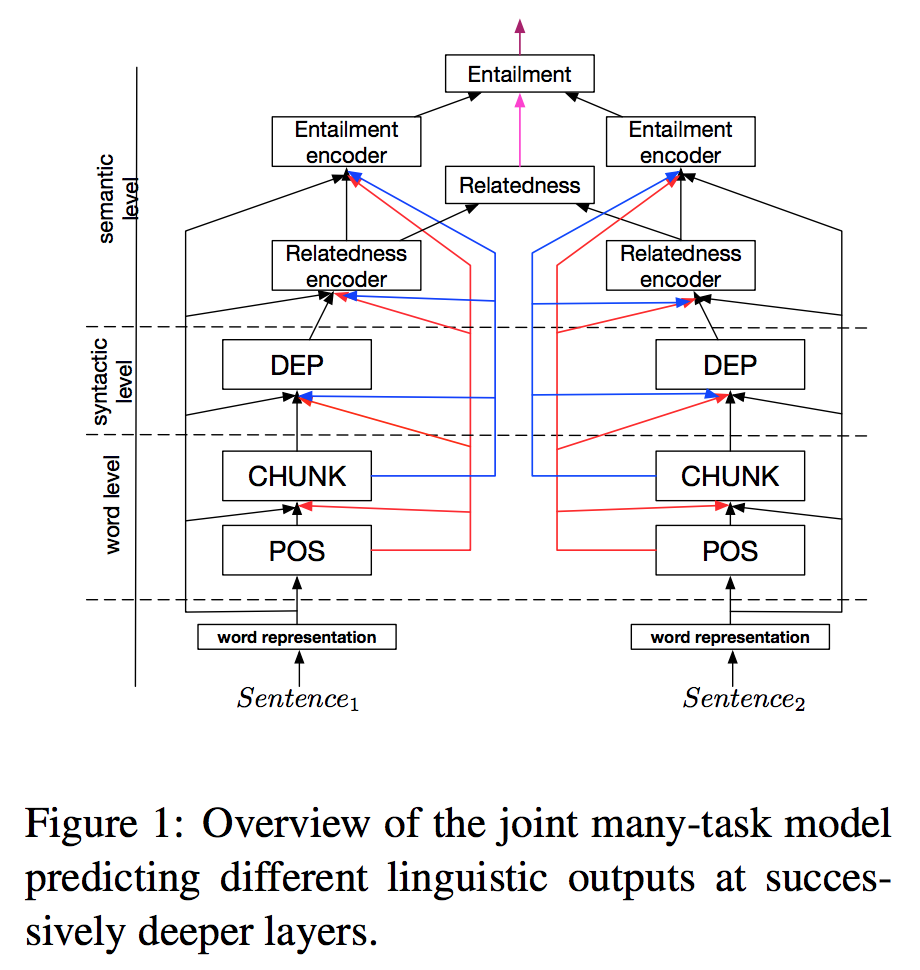

Perform multiple NLP tasks in a hierarchical manner: train a single multi-layer model in which different layers handle different tasks, ranging from morphology through syntax to semantics.

Key Ideas

- Different layers handle different tasks.

- Lower layers handle easier tasks; higher layers handle more difficult tasks.

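- Tasks are stacked from bottom to top: POS tagging (POS), chunking (CHUNK), dependency parsing (DEP), semantic relatedness (Relatedness), and textual entailment (Entailment).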

- Train the tasks sequentially, from easy to hard, and add a regularization term to limit catastrophic forgetting of the lower tasks.
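The regularized objective for task k can be sketched in the generic form below. The notation is assumed here, not copied from the paper: L_k is the task's own loss, theta_<k are the parameters of the layers below task k, theta'_<k is a stored snapshot of those parameters from earlier in training, and delta >= 0 sets how strongly drift away from the snapshot is penalized. The paper's full objective also contains other terms (such as weight decay) that are omitted here.

```latex
J_k(\theta) = \mathcal{L}_k(\theta) + \delta \,\bigl\lVert \theta_{<k} - \theta'_{<k} \bigr\rVert_2^2
```

With delta = 0 this reduces to plain fine-tuning of the whole stack on task k.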

Model


Structure

- Each task uses its own BiLSTM layer.

- The (n+1)-th layer depends on the output of the n-th layer.
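The stacking can be illustrated with a minimal pure-Python sketch. It assumes a toy bidirectional tanh RNN in place of each BiLSTM (a hypothetical simplification), random weights, and the hypothetical helper names `build_stack` and `forward`. It also leaves out task-specific classifiers, the shortcut connections of the paper's model, and the sentence-pair handling used for relatedness and entailment. The only point it shows is the data flow: one layer per task, each reading the previous layer's output.

```python
import math
import random

# Task order from the lowest (easiest) layer to the highest.
TASKS = ["POS", "CHUNK", "DEP", "Relatedness", "Entailment"]


def make_layer(in_dim, hidden, rng):
    """Weights for a toy bidirectional tanh RNN (hypothetical stand-in for a BiLSTM)."""
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {"Wf": mat(hidden, in_dim), "Uf": mat(hidden, hidden),
            "Wb": mat(hidden, in_dim), "Ub": mat(hidden, hidden)}


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def run_direction(W, U, xs):
    """Run a simple tanh RNN over the token vectors xs, returning one state per token."""
    h = [0.0] * len(U)
    states = []
    for x in xs:
        h = [math.tanh(a + b) for a, b in zip(matvec(W, x), matvec(U, h))]
        states.append(h)
    return states


def bi_layer(layer, xs):
    """Concatenate the forward and backward states for each token."""
    fwd = run_direction(layer["Wf"], layer["Uf"], xs)
    bwd = run_direction(layer["Wb"], layer["Ub"], xs[::-1])[::-1]
    return [f + b for f, b in zip(fwd, bwd)]


def build_stack(emb_dim, hidden, seed=0):
    """One layer per task; each layer's input size equals the previous layer's output size."""
    rng = random.Random(seed)
    layers, in_dim = {}, emb_dim
    for task in TASKS:
        layers[task] = make_layer(in_dim, hidden, rng)
        in_dim = 2 * hidden  # the next layer reads this layer's bidirectional output
    return layers


def forward(layers, embeddings):
    """Return each task's per-token representation; layer n+1 consumes layer n's output."""
    reps, xs = {}, embeddings
    for task in TASKS:
        xs = bi_layer(layers[task], xs)
        reps[task] = xs
    return reps


# Usage: a made-up 4-token sentence with 3-dimensional word vectors.
sentence = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.5], [0.2, 0.2, 0.2], [-0.3, 0.1, 0.0]]
reps = forward(build_stack(emb_dim=3, hidden=2), sentence)
```

Each entry of `reps` holds one (2 * hidden)-dimensional vector per token; a real model would put a task-specific classifier on top of each layer.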


Data

Each task is trained on its own existing labeled dataset.


Training

Train the tasks in sequence, from low level to high level: POS, CHUNK, DEP, Relatedness, Entailment. Add successive regularization: while training the current task, penalize changes to the parameters of the lower layers so that they do not drift too far from the values learned for the previous tasks.
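A minimal pure-Python sketch of sequential training with a successive-regularization-style penalty follows. The assumptions are deliberately strong: each layer is reduced to one scalar parameter, each task's toy loss reads the sum of all layers up to its own, the targets are made up, and `delta` plays the role of the regularization strength. The penalty pulls the lower layers toward a snapshot taken just before the current task starts; the paper's exact snapshot choice and its other loss terms are not reproduced.

```python
# Toy setup (hypothetical): each layer is reduced to one scalar parameter, bottom layer first.
TASKS = ["POS", "CHUNK", "DEP", "Relatedness", "Entailment"]


def task_loss(theta, k, targets):
    """Toy loss for task k; it reads every layer up to and including its own."""
    return (sum(theta[: k + 1]) - targets[k]) ** 2


def objective(theta, snapshot, k, targets, delta):
    """Task loss plus a successive-regularization-style penalty on the layers below k."""
    penalty = sum((theta[j] - snapshot[j]) ** 2 for j in range(k))
    return task_loss(theta, k, targets) + delta * penalty


def train_sequentially(targets, delta, lr=0.05, steps=1000, epochs=1):
    """Gradient descent on each task in turn, from the bottom task to the top task."""
    theta = [0.0] * len(targets)
    for _ in range(epochs):
        for k in range(len(targets)):
            snapshot = list(theta)  # lower-layer values before task k starts
            for _ in range(steps):
                residual = sum(theta[: k + 1]) - targets[k]
                # Analytic gradient of `objective` w.r.t. layers 0..k (higher layers are untouched).
                grads = [2 * residual + (2 * delta * (theta[j] - snapshot[j]) if j < k else 0.0)
                         for j in range(k + 1)]
                for j, g in enumerate(grads):
                    theta[j] -= lr * g
    return theta


# Usage with made-up targets: compare no penalty against delta = 1.0.
targets = [1.0, 1.5, 2.0, 2.5, 3.0]
for delta in (0.0, 1.0):
    theta = train_sequentially(targets, delta)
    losses = {t: round(task_loss(theta, k, targets), 4) for k, t in enumerate(TASKS)}
    print(f"delta={delta}: {losses}")
```

With `delta=0.0` the lower layers keep shifting as each new task is trained, so the POS loss grows; with `delta=1.0` the newest layer absorbs the change and the POS loss stays near zero. The toy is deliberately easy for the penalty, since the top parameter alone can fit each target; on real tasks the two terms trade off against each other.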

Performance

- The jointly trained model performs better than the corresponding single-task models.

- The joint model's results are comparable to those of existing single-task models.

- Training the tasks sequentially works better than training them in a shuffled order.

- Successive regularization is useful for tasks with small amounts of training data.

Comments

- Multi-task learning and a task hierarchy are both useful.

- Train from bottom to top.

- The task dependency structure could perhaps be learned instead of being fixed by hand.

- Better techniques against catastrophic forgetting could be used. In addition, sampling strategies that favor tasks with limited data might help.

Reference

Paper link: https://aclweb.org/anthology/D17-1206